Submitting an agent for Leaderboard version 2.0

By submitting your agent to the CARLA Autonomous Driving Leaderboard, you are accepting the terms of use. This page covers submitting an agent for Leaderboard version 2.0. If you are using Leaderboard version 1.0, please consult this submission guide.

General steps

To create and submit your agent, you need a local copy of the leaderboard and scenario_runner repositories. If you do not have them yet, please visit the Get started section first.


1. Create a dockerfile to build your agent

For the CARLA leaderboard to evaluate your agent, the agent must be encapsulated in a Docker image. For the image to work, you must be able to run your agent with the leaderboard inside the container. You can either create your image from scratch or follow the guidelines below:

- Host your code in a directory outside of ${LEADERBOARD_ROOT}. We will refer to this directory as ${TEAM_CODE_ROOT}. For ROS-based agents, this should be your ROS workspace.

- Make use of one of the example Dockerfiles available in the repository, where all the dependencies needed for scenario runner and leaderboard have already been set up. Python-based agents can use this example Dockerfile, while ROS-based agents have their own Dockerfile available here. Include the dependencies and additional packages required by your agent. We recommend adding the new commands in the area delimited by the tags “BEGINNING OF USER COMMANDS” and “END OF USER COMMANDS”.

- Update the variable TEAM_AGENT to point to your agent file, and TEAM_CONFIG if your agent needs a configuration file for initialization. Do not change the rest of the path “/workspace/team_code”.

- Make sure anything you want to source is inside ${HOME}/agent_sources.sh, as sources added anywhere else will not be invoked. This file is automatically sourced before running the agent in the cloud.
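For illustration, a minimal ${HOME}/agent_sources.sh could look like the sketch below. Everything in it is an assumption about a typical agent rather than a required layout: the ROS setup paths (including a colcon-style workspace overlay under /workspace/team_code) and the PYTHONPATH entry are examples, so keep only the lines your agent actually needs.

```shell
# Hypothetical ${HOME}/agent_sources.sh: this is the only file sourced
# before the agent runs in the cloud, so every "source" or "export"
# the agent depends on belongs here.

# ROS-based agents (assumption): load the ROS environment if it exists.
if [ -n "${ROS_DISTRO:-}" ] && [ -f "/opt/ros/${ROS_DISTRO}/setup.bash" ]; then
    source "/opt/ros/${ROS_DISTRO}/setup.bash"
fi

# Then load the team workspace overlay. This assumes a colcon-style
# workspace; catkin workspaces typically use devel/setup.bash instead.
if [ -f "/workspace/team_code/install/setup.bash" ]; then
    source "/workspace/team_code/install/setup.bash"
fi

# Python-based agents (assumption): make the team code importable,
# without adding a duplicate entry if this file is sourced twice.
case ":${PYTHONPATH:-}:" in
    *":/workspace/team_code:"*) ;;
    *) export PYTHONPATH="/workspace/team_code${PYTHONPATH:+:$PYTHONPATH}" ;;
esac
```

Guarding each source with a file check keeps the script harmless on machines where a given setup file is absent.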

Note that any changes you make to the Leaderboard or Scenario Runner repositories will be overwritten when you submit your entry to the cloud.

2. Build your agent into a docker image

Once the Dockerfile is ready, build your image by running the make_docker.sh script, which generates a Docker image named leaderboard-user.

${LEADERBOARD_ROOT}/scripts/make_docker.sh [--ros-distro|-r ROS_DISTRO]

This script checks that all the required environment variables are defined, then copies the contents of the directories they point to into the Docker image. We recommend defining these variables in your ${HOME}/.bashrc file. The required variables are:

- ${CARLA_ROOT}

- ${SCENARIO_RUNNER_ROOT}

- ${LEADERBOARD_ROOT}

- ${TEAM_CODE_ROOT}

- ${ROS_DISTRO} (only required for ROS agents)

Feel free to adjust this script to copy additional files and resources as you see fit.
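As a sketch, the variables listed above could be exported from ${HOME}/.bashrc and checked before calling make_docker.sh. The paths are placeholders for your own checkouts, and check_leaderboard_env is a hypothetical helper, not part of the leaderboard scripts (make_docker.sh performs its own checks).

```shell
# Hypothetical excerpt of ${HOME}/.bashrc; replace the example paths with
# the locations of your own checkouts.
export CARLA_ROOT="${HOME}/carla"
export SCENARIO_RUNNER_ROOT="${HOME}/scenario_runner"
export LEADERBOARD_ROOT="${HOME}/leaderboard"
export TEAM_CODE_ROOT="${HOME}/team_code"
# export ROS_DISTRO=<your ROS distribution>   # only needed for ROS agents

# Hypothetical pre-flight check: report every required variable that is
# empty or unset (plus any extra names passed as arguments) and return
# non-zero if any of them is missing.
check_leaderboard_env() {
    local missing=0 var
    for var in CARLA_ROOT SCENARIO_RUNNER_ROOT LEADERBOARD_ROOT TEAM_CODE_ROOT "$@"; do
        if [ -z "${!var:-}" ]; then
            echo "Missing environment variable: ${var}" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage (Python agent):
#   check_leaderboard_env && "${LEADERBOARD_ROOT}/scripts/make_docker.sh"
# Usage (ROS agent):
#   check_leaderboard_env ROS_DISTRO && \
#       "${LEADERBOARD_ROOT}/scripts/make_docker.sh" --ros-distro "${ROS_DISTRO}"
```

The check lists all missing variables at once rather than stopping at the first one, so a single run shows everything that still needs to be set.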

You can test your Docker image by running the leaderboard locally on your computer.

We also want to highlight the leaderboard-agents repository, which contains several agents designed to work with Leaderboard 2.0, along with the files needed to encapsulate them in a Docker image.

3. Register a new user at Eval AI

Our partners at Eval AI have developed the user interface for the leaderboard. To make a submission, you need to register a user on the website.

Make sure to fill in your user affiliation; otherwise, you may not be accepted to participate in the challenge.

4. Create a new team

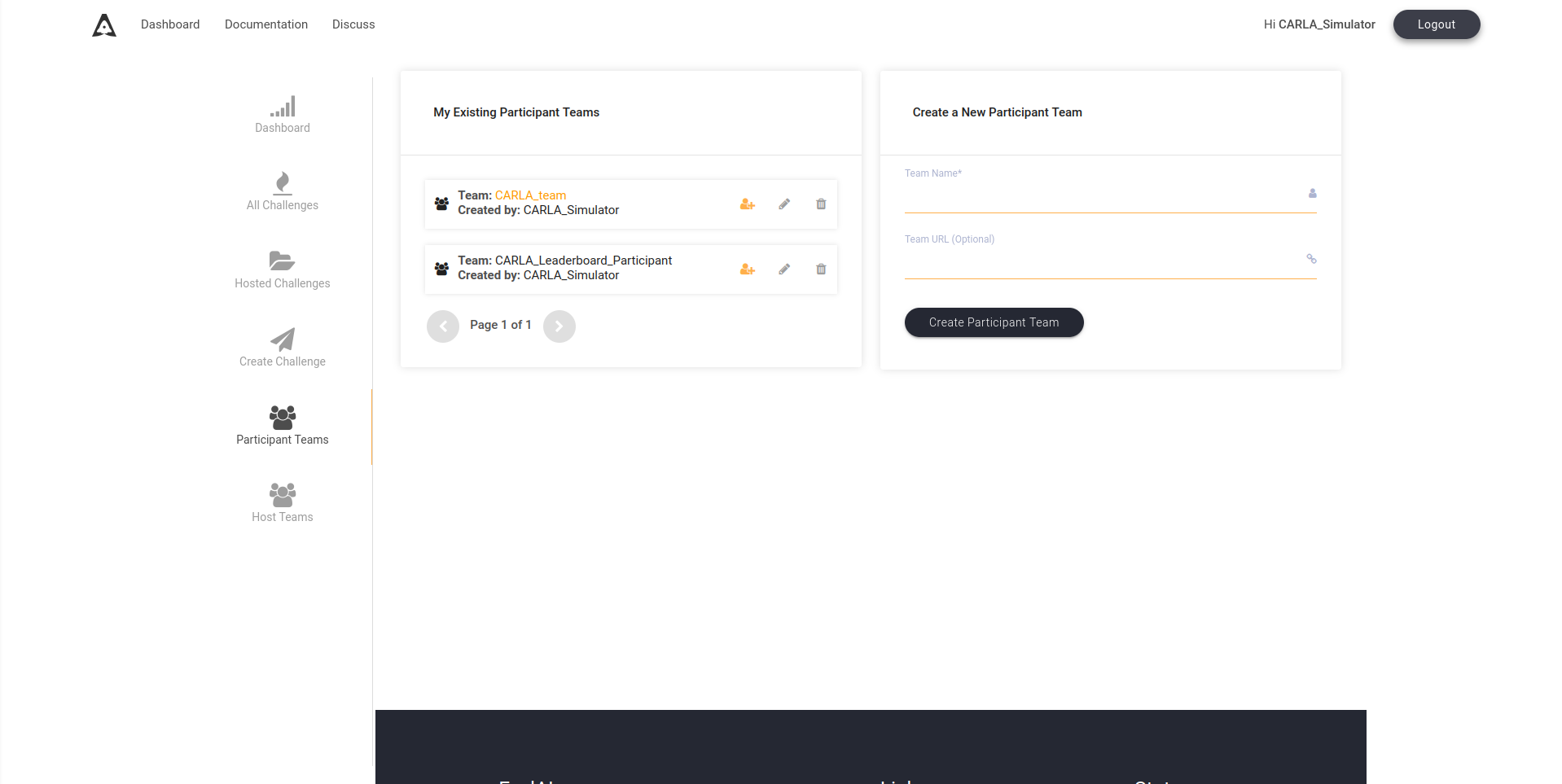

After registering a user at Eval AI, create a new team in the Participant Teams section, as shown below. All participants need to register a team in the CARLA Leaderboard 2.0.

Team creation screen in AlphaDrive UI.

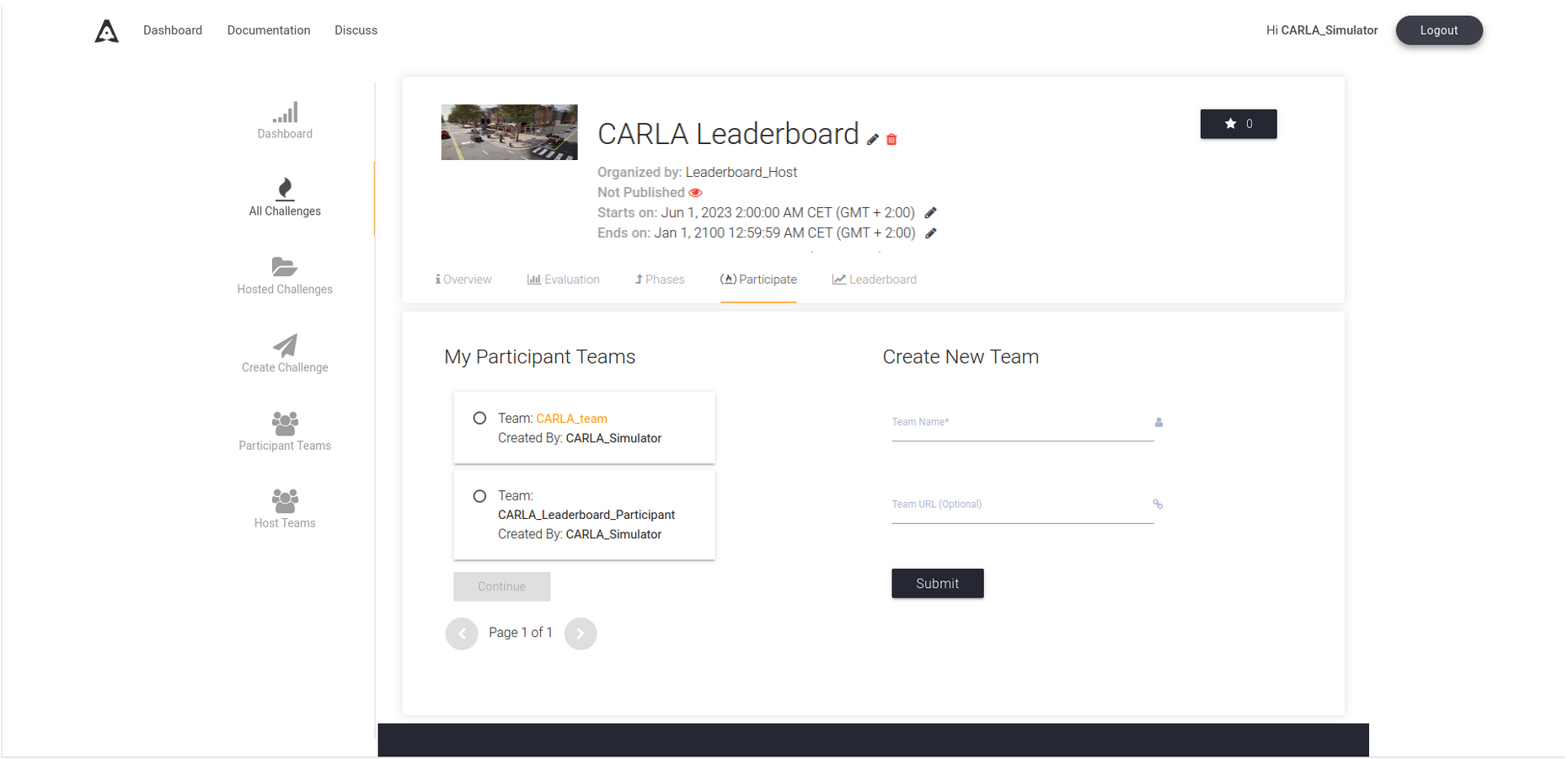

5. Apply to the CARLA Leaderboard

Go to the All Challenges section and find the CARLA Leaderboard challenge, or use this link. Select Participate, then choose one of your participant teams to apply to the challenge. You will need to wait for your team to be verified by the CARLA Leaderboard admins. Once your team is verified, you can start to make submissions.

Benchmark section.

6. Make your submission

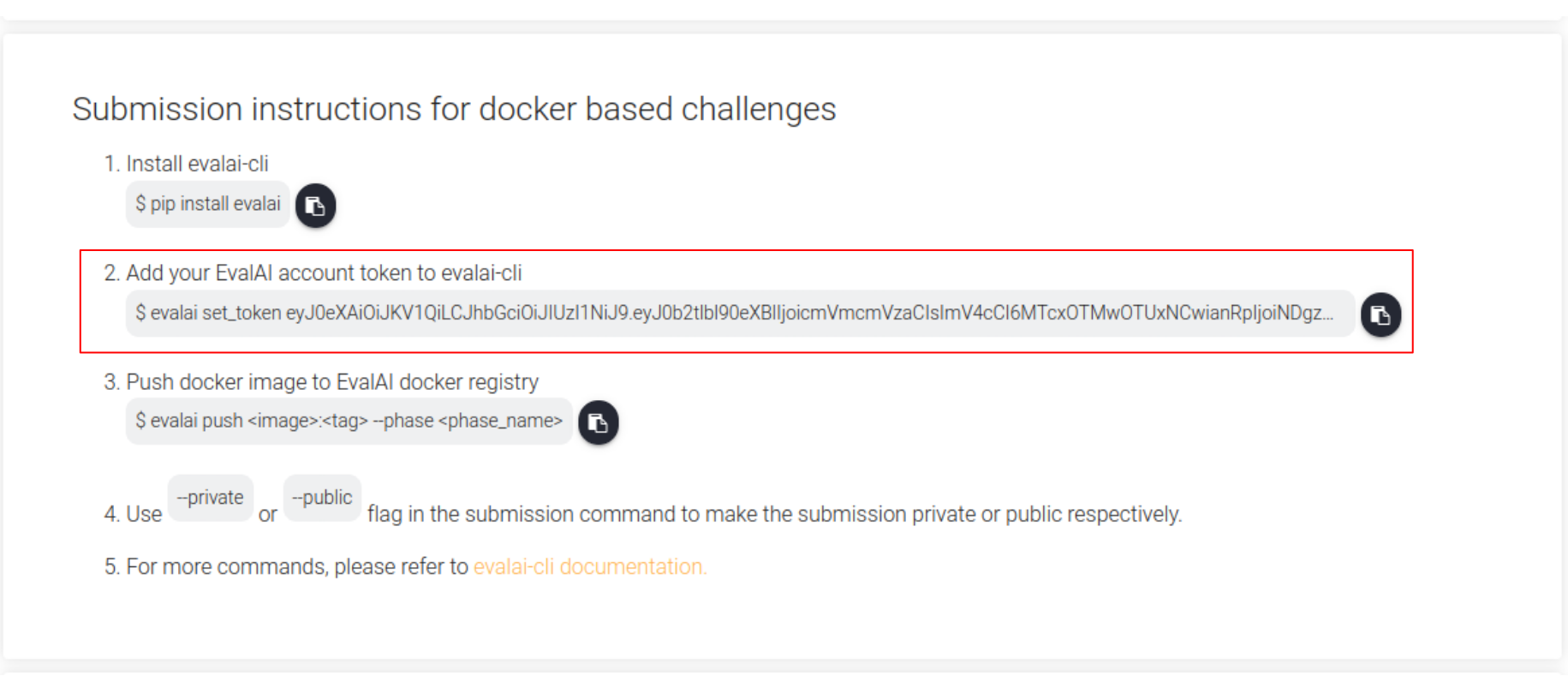

Once your team has been verified, you will be able to submit. The Overview tab contains general information about the CARLA leaderboard; go to the Submit section to make your submission, where you will find the submission instructions.

You will need to install the EvalAI CLI with pip:

pip install evalai

Next, authenticate your user with your token using the following command:

evalai set_token <token>

You can retrieve your token from the Submit section of the EvalAI website. You may copy and paste the command directly from the submission instructions:

CLI submission instructions.

Note that the token is specific to your user: each user sees a different token in this section of the website. Do not share tokens, or it will be unclear who authored a submission.

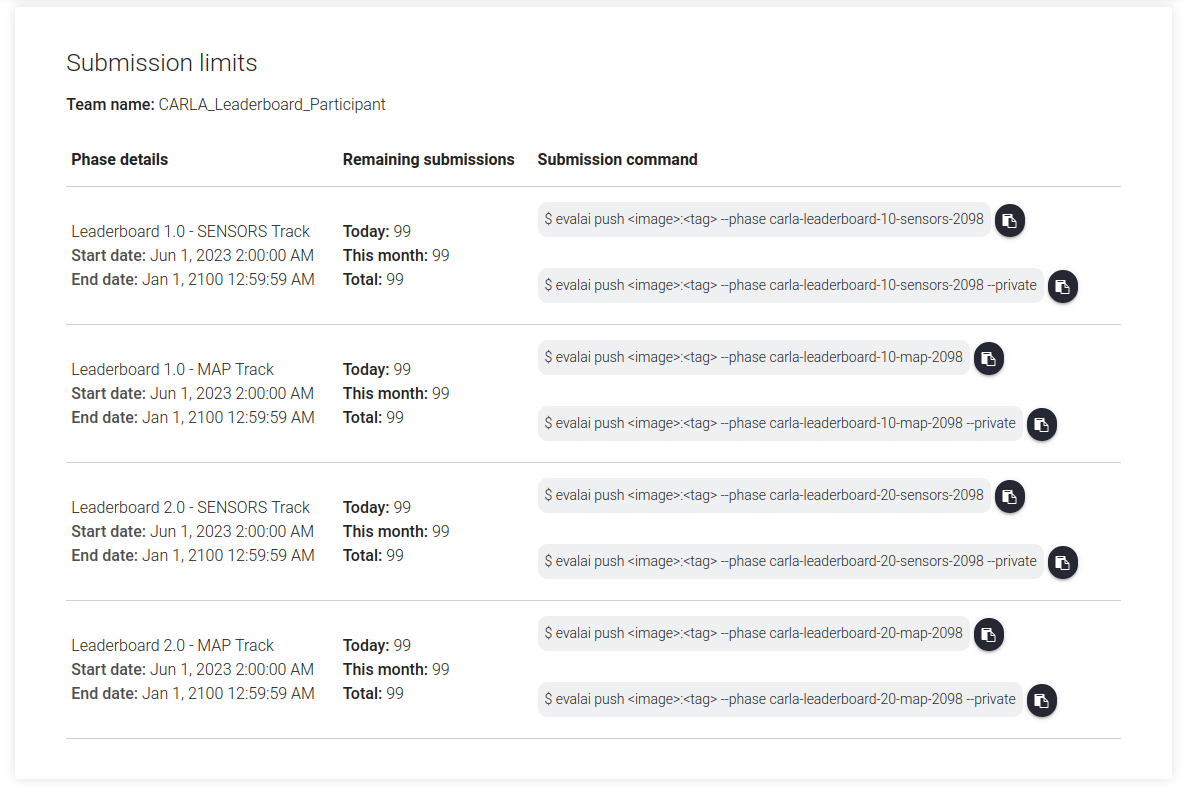

Use the following command to push your Docker image:

evalai push <image>:<tag> --phase <phase_name>
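The push step can be wrapped in a small shell function that refuses to run with missing arguments and checks that the image exists locally (using docker image inspect) before calling evalai push. This is a hypothetical helper, not part of the EvalAI CLI: it only uses the evalai push syntax shown above, and DRY_RUN is an illustrative variable that prints the command instead of running it. The phase name must be copied exactly from the Submit section.

```shell
# Hypothetical wrapper around "evalai push <image>:<tag> --phase <phase_name>".
# It is not part of the EvalAI CLI; DRY_RUN=1 is an illustrative switch.
evalai_push() {
    local image="${1:-}" tag="${2:-}" phase="${3:-}"
    if [ -z "$image" ] || [ -z "$tag" ] || [ -z "$phase" ]; then
        echo "usage: evalai_push <image> <tag> <phase_name>" >&2
        return 2
    fi
    # Build the command as an array so arguments are never re-split.
    local cmd=(evalai push "${image}:${tag}" --phase "$phase")
    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Print the command for review instead of running it.
        printf '%s\n' "${cmd[*]}"
        return 0
    fi
    # Fail early if the image was never built locally.
    if ! docker image inspect "${image}:${tag}" >/dev/null 2>&1; then
        echo "Docker image ${image}:${tag} not found locally" >&2
        return 1
    fi
    "${cmd[@]}"
}
```

For example, DRY_RUN=1 evalai_push leaderboard-user latest <phase_name> prints the command for review, and running it without DRY_RUN performs the push. The latest tag assumes the image was built without an explicit tag, in which case Docker applies latest by default.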

You can choose between four tracks (known as phases on the EvalAI website):

- Leaderboard 2.0 - SENSORS

- Leaderboard 2.0 - MAP

- Leaderboard 1.0 - SENSORS

- Leaderboard 1.0 - MAP

For the Leaderboard 2.0 tracks, you must first complete the respective qualifying task:

- Leaderboard 2.0 - SENSORS Qualifier Track

- Leaderboard 2.0 - MAP Qualifier Track

NOTE: Certain tracks may be temporarily closed for the duration of annual competitions involving the Leaderboard. Keep an eye on the Leaderboard website and Discord forums to know when tracks may be closed to submissions.

To make sure you submit to the correct track, you can copy and paste the commands directly from the section below, replacing <image> and <tag> with the appropriate values for your Docker image:

CLI commands to submit to each track.

Congratulations! You have now made a submission to the CARLA Leaderboard challenge.

7. Check your submission data

Once the submission has been created, you can go to the My Submissions section to see the details of all your submissions.

The status gives a high-level view of the state of the submission. All submissions start in the SUBMITTED status and change to PARTIALLY_EVALUATED once they start running in the backend. Once finished, the status changes to either FINISHED, if everything went correctly during the simulation, or FAILED, if something unexpected happened.

Submissions that have failed can be resumed, which will continue the submission without repeating the already completed routes. These will have the RESUMED status instead of SUBMITTED until they start running.

At any point, users can cancel a submission, stopping it and changing its status to CANCELLED.

Any submission can also be restarted, which creates a new submission with the same Docker image. If the submission was still running, it is automatically cancelled.
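The status lifecycle described above can be summarised as a transition table. The sketch below is a hypothetical shell helper, not part of EvalAI, that prints the statuses a submission can move to next. Restarting is left out because it creates a new submission that starts in SUBMITTED, and the table assumes that cancelling only applies to submissions that have not already ended.

```shell
# Hypothetical transition table for the submission statuses described above.
# Prints the statuses a submission can move to next (space-separated).
next_statuses() {
    case "${1:-}" in
        SUBMITTED|RESUMED)
            echo "PARTIALLY_EVALUATED CANCELLED" ;;   # waiting to start running
        PARTIALLY_EVALUATED)
            echo "FINISHED FAILED CANCELLED" ;;       # running in the backend
        FAILED)
            echo "RESUMED" ;;                         # resuming skips completed routes
        FINISHED|CANCELLED)
            : ;;                                      # no further transitions
        *)
            echo "unknown status: ${1:-}" >&2
            return 1 ;;
    esac
}
```

Reading the table row by row shows that FAILED is the only ended state from which the same submission can continue.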

To get the results of a submission, open the Stdout file link. This file includes general information about the submission, including the sensors used, route progress, and final results, as well as a glossary of the status and performance of each route.

Lastly, if you want to make the submission public, check the Show on Leaderboard box and it will appear in the Leaderboard section of the challenge. Feel free to edit the submission to add the method’s name, description, and a URL to the project or publication.